Taming the AI Wild West

Before generative AI can be successfully adopted by enterprises on a massive scale, the liability, cost, regulatory risk, and sustainability aspects must all be carefully considered. Pipeline recently had the opportunity to meet with Madison Gooch, director of Watson and AI Solutions at IBM, to discuss these considerations and how generative AI is being used today.

Capitalizing on Enterprise-grade Generative AI

How quickly businesses can capitalize on generative AI depends not only on the projected ROI but also on competitive factors, such as productivity, that will accelerate adoption for enterprises and their stakeholders. According to IBM, businesses are already seeing legitimate returns from enterprise-grade generative AI investments in real-world use cases. For example, enterprises are overlaying conversational interfaces on top of business and policy documentation to improve productivity across customer, customer-care, and employee interactions. Summarization is also widely used by knowledge workers grappling with massive amounts of audio and other unstructured data, such as recordings, legal discovery documents, medical transcripts, research documents, and sales meeting notes. Generative AI is being used both to summarize this information and to automatically propagate the relevant details into other systems. Then there is the generative aspect itself. Businesses today are using generative AI to help develop a range of content, from legal briefs to educational materials to medical reports, so that workers can produce near-final content in a fraction of the time. As businesses see processes streamlined and productivity increased, generative AI can be extended to a wider range of use cases. But this will only be possible with an enterprise-grade generative AI framework that has the appropriate parameters in place.

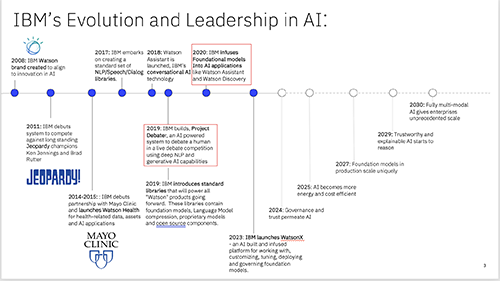


Picking the Right Generative AI Model

When it comes to addressing governance, choosing the right model before deploying generative AI at scale is key. IBM has been the frontrunner in machine learning for decades, and its investment in AI has accelerated rapidly in recent years (see Figure 1, above). IBM has been dedicated to creating responsible AI from the very beginning, through the evolution from machine learning to deep learning, to broader AI, and now to generative AI. IBM's innovation has paved the way for the foundation models we recognize as generative AI today. That work also underpins IBM's own offerings, including the Watson and watsonx platforms, and has yielded invaluable contributions to the open-source community.

IBM’s AI evolution has been governed by a deep commitment to compliance, governance, and the ethical use of AI. Gooch says this commitment permeates every facet of IBM’s approach and underpins the company’s belief that pure input results in ethical output. By using a model trained on private, proprietary, and vetted third-party data that undergoes rigorous certification, IBM preserves data integrity and ensures reliable generative AI results. Unlike generative AI driven by large language models that rely on public or unverifiable sources, IBM’s approach also provides transparency through auditable models that can trace the lineage of data sources and instill