Mobile AI

Longer-term hardware innovations initially targeted at data centers may trickle down to mobile. One example, developed for higher performance and lower power in data centers, is Neurophos. Such technologies appear to be several years away from widespread data center adoption, and probably several more years away from being applied to mobile.

Application Approaches

Because of the technical limitations of mobile devices, for the next few years AI will be implemented on them in four ways:

1. accessed through a browser on the mobile device

2. accessed through a cooperative local application communicating with a remote AI

3. running a small local model cooperating with a large remote AI

4. running a small model locally

From the point of view of privacy, security, low latency, and reliable access, running an LLM locally has clear advantages. The question is whether the result has fully adequate quality and functionality. In AI, quality is a measure of how useful the AI's output is, which includes how accurate it is. Accuracy covers the hallucination problem as well as measures such as completeness, precision, and fidelity. Functionality concerns the range of outputs: does that range fully meet the application requirements?

For some relatively simple applications, small LLMs may fully meet the requirements for functionality and quality. In the past, there have been concerns about the ability of small models to meet the requirements of more complex applications.

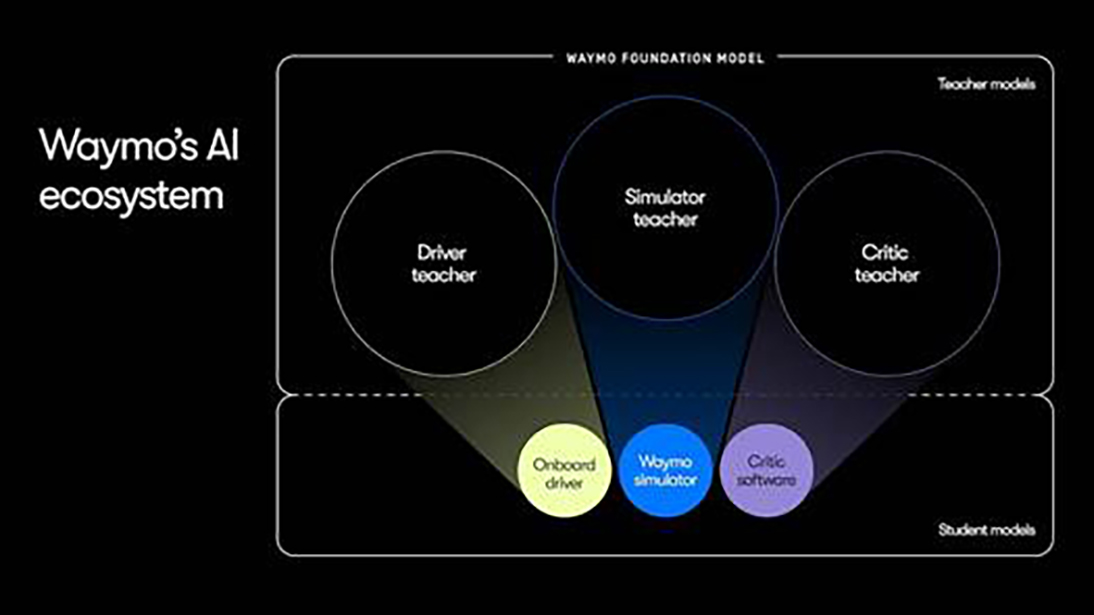

Figure 1 - Waymo's AI Ecosystem

Waymo has come up with a very interesting solution for meeting the requirements of complex mobile applications with small LLMs: a large AI is used to ‘create’ a very powerful AI focused on a single specific domain. This is done through a process called ‘Distillation’.

In distillation, a very large model (the teacher) is first trained on a very large training corpus. The teacher’s inference results are then analyzed, not only for the answer it ranks highest but for the full set of probabilities it assigns, including the very low probability results that are never presented to an end user. The relative sizes of those low probabilities show how the model tells a pretty wrong answer from a terribly wrong one, and together they describe the general way the large model makes decisions. The small model (the student) is then trained to reproduce that behavior. The result is a model with many fewer parameters than the large model that nevertheless performs close to it. This small, powerful model is then deployed in mobile applications.
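As a rough illustration of the idea, the sketch below computes one common form of distillation loss: the KL divergence between temperature-softened output distributions of the large model (called `teacher` here) and the small model (called `student`). This is a generic textbook formulation, not a description of Waymo's actual method; the function names, the temperature value, and the example logits are all assumptions made for illustration.

```python
import math

def log_softmax(logits, temperature=1.0):
    """Numerically stable log of the softmax of logits / temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_sum for s in scaled]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    A higher temperature gives more weight to the low-probability
    outputs, which is where the teacher's ranking of wrong answers lives.
    """
    log_p = log_softmax(teacher_logits, temperature)
    log_q = log_softmax(student_logits, temperature)
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))

# Hypothetical logits for three candidate answers.
teacher = [9.0, 4.0, 1.0]        # answer 1 is "pretty wrong", answer 2 "terribly wrong"
close_student = [8.0, 3.5, 0.5]  # same top answer, same ranking of the wrong ones
far_student = [8.0, 0.5, 3.5]    # same top answer, wrong answers ranked the other way

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

Both hypothetical students pick the same top answer as the teacher, so a loss based only on the correct answer would treat them alike. The distillation loss is lower for `close_student` because it also matches how the teacher ranks the wrong answers.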