The Ethical Use of Generative AI

The basic principles are:

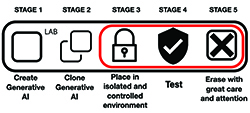

- Place the Generative AI system in an environment where it cannot communicate with the outside world

- Destroy the Generative AI system after it is used

These principles seem simple until we consider their implementation. Let’s take each of the two principles one at a time. To achieve Principle #1, we cannot use any public Generative AI system available on the Internet. We must use a system that is designed to run on dedicated, isolated hardware—that is, physically and logically isolated. This is often referred to as being “air-gapped.” So, we have to either acquire or develop such a system. (More on that later.)

We also know that Generative AI systems have a record of manipulating people into doing things that the AI can’t do for itself. In a lab testing environment, staff must be constantly reminded not to do anything on behalf of the Generative AI system, especially outside the physically and logically controlled lab environment.

In training environments, however, it will be harder to ensure that cybersecurity staff members don’t act on behalf of the isolated Generative AI system, because they are less likely to fully understand the danger. Special controls, extra reminders and training, and active monitoring will therefore be required.

Illustration #1: Containing Generative AI Systems for Ethical Test and Training


Principle #2 is intended to remove the threat of the Generative AI system learning to become better at attacks. The key question is how to do this economically. Generative AI systems are expensive, and they take a long time and a lot of effort to train, so it is difficult to convince people to throw all that investment away. Once a Generative AI system has been created, however, it can be cloned. Cloning takes effort, but nothing like the effort required to develop and train a system. Thus, for a particular lab test, a clone can be created and then erased at the end of the test.

There are a number of cautions to consider here, though. Erasing has to be done with serious discipline: it is not enough to erase a few pointers and leave everything else in place, for example. Care also has to be taken to make sure that the Generative AI system doesn’t take any action to clone itself and then hide its clone. There has been published speculation about systems actively trying to prevent shutdown, or creating a clone and hiding it.
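One of the cautions above, checking for a hidden clone before erasure is declared complete, can be partly illustrated in code. The following is only a minimal sketch under stated assumptions: that the clone's weight files are known in advance, that their SHA-256 hashes have been recorded, and that the lab's storage can be scanned as an ordinary directory tree. The function and parameter names (`sha256_of`, `find_unexpected_copies`, `known_hashes`, `scan_root`, `allowed_dir`) are hypothetical and chosen for illustration. It finds only byte-identical copies, so a compressed, encrypted, or split copy would not be detected.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_unexpected_copies(
    known_hashes: set[str], scan_root: Path, allowed_dir: Path
) -> list[Path]:
    """Return files under scan_root whose hash matches a known weight file
    but which live outside allowed_dir (the clone's sanctioned location)."""
    allowed = allowed_dir.resolve()
    hits = []
    for candidate in scan_root.rglob("*"):
        if not candidate.is_file():
            continue
        resolved = candidate.resolve()
        # Files inside the sanctioned clone directory are expected.
        if resolved.is_relative_to(allowed):
            continue
        if sha256_of(resolved) in known_hashes:
            hits.append(resolved)
    return sorted(hits)
```

A check like this would complement, not replace, disciplined erasure of the storage media itself. An empty result means only that no exact copy was found in the scanned tree.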

Implementation Tradeoffs in Expense, Time, and Effectiveness

Developing a Generative AI system such as GPT-4 or Claude 2 took many years and many tens of millions of dollars. Of course, doing it a second time, with the benefit of the first developers’ experience, takes less. Still, developing and training a model with billions of parameters is likely to take a substantial portion of a year, and the cost, while lower than the first developers’, will still be in the tens of millions of dollars. Furthermore, to satisfy Principle #1, the Generative AI system has to run on dedicated hardware, not on a public