The Race to Build AI-optimized Data Center Networks

By: Aniket Khosla

It’s hard to believe that less than two years ago most of the tech industry considered artificial intelligence (AI) a niche topic. A fascinating and important topic, but one discussed in mostly future-focused terms. Fast forward to mid-2024, and the future is now. Practically every solution area in tech now includes a strategy for incorporating generative AI (GenAI) or other AI-enabled applications. And businesses in every industry are racing to put AI to work — automating operations, lowering costs, improving quality, and more.

All this enthusiasm can make it seem like the transformative potential of AI is practically limitless. Inside the world’s largest data centers, however, where AI application and training workloads run, the excitement is tempered by questions about how, exactly, operators can keep up with exploding demand. As AI workloads grow larger and more complex, how many processors and accelerators will AI clusters require, and how can data center fabrics best interconnect them? How will data center architectures meet the exacting throughput, latency, and lossless transmission requirements of real-time AI applications? What types of interfaces are best suited for front- and back-end infrastructures, and when should operators expect to need next-generation interfaces?

Many of these questions don’t yet have firm answers. However, by examining the requirements for processing AI application workloads and the ways these requirements are influencing data center network designs, we can start to get a clearer picture.

AI Puts the Squeeze on Data Center Architectures

According to Dell’Oro Group, global AI application traffic is increasing by a factor of 10x every two years. Hyperscale cloud providers are already approaching terabit networking thresholds for AI workloads, and other data center operators aren’t far behind. As the effects of AI adoption ripple throughout the communications industry, hyperscalers are adding bandwidth and compute infrastructure as quickly as vendors can deliver it.

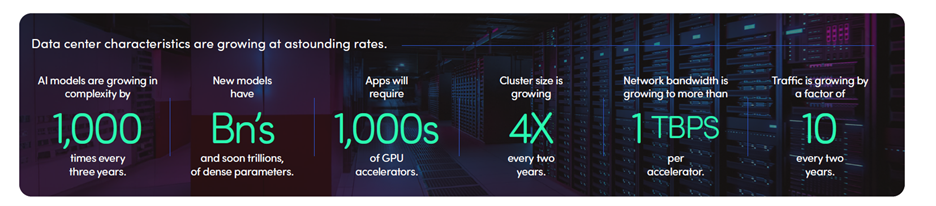

There’s a reason for this accelerating infrastructure investment: AI applications bring requirements on a totally different scale than traditional data center workloads. (Figure 1) These requirements are growing for both AI model training (when an application ingests vast amounts of data to train its algorithm on) and inferencing (when the AI model puts this training to work on new data).

The scale of the networking fabric needed for a given AI cluster depends on the size and complexity of the applications that infrastructure supports. But with AI models growing 1,000x more complex every three years, we should expect that in the near future, clusters will need to support models with trillions of dense parameters. In practical terms, this will require a fabric connecting thousands, even tens of thousands of central processing units (CPUs), graphics processing units (GPUs), field programmable gate arrays (FPGAs), and other “xPU” accelerators.

Figure 1. AI impact on data centers, by the numbers. (Source: Dell'Oro Group)

These requirements won’t have much immediate effect on data center front-end access networks used to ingest data for AI algorithm training. Back-end infrastructures, however, are another matter. Here, operators can’t meet exploding AI workload demands by simply throwing more hardware at the problem. They need separate, scalable, routable back-end infrastructures designed explicitly to interconnect xPUs for AI training and inferencing.

Evolving Infrastructure Requirements

New back-end infrastructures needed to support AI applications have very different, far more demanding performance requirements than traditional data center networks. To avoid bottlenecks in data transfers among compute and accelerator nodes, back-end